Claim

US President Joe Biden admitted to taking the Pakistan Army chief on board in a conspiracy to remove former Prime Minister Imran Khan from office in April 2022, according to an audio recording.

Fact

Soch Fact Check conducted rigorous deepfake tests and spoke with multiple experts in the relevant fields to ascertain that the audio of Biden’s alleged confession is not authentic and is most likely AI-generated. A lack of any credible reporting on the development raises further questions about its authenticity. That its release came just weeks before the 2024 US elections further supports the conclusion that the audio is fake.

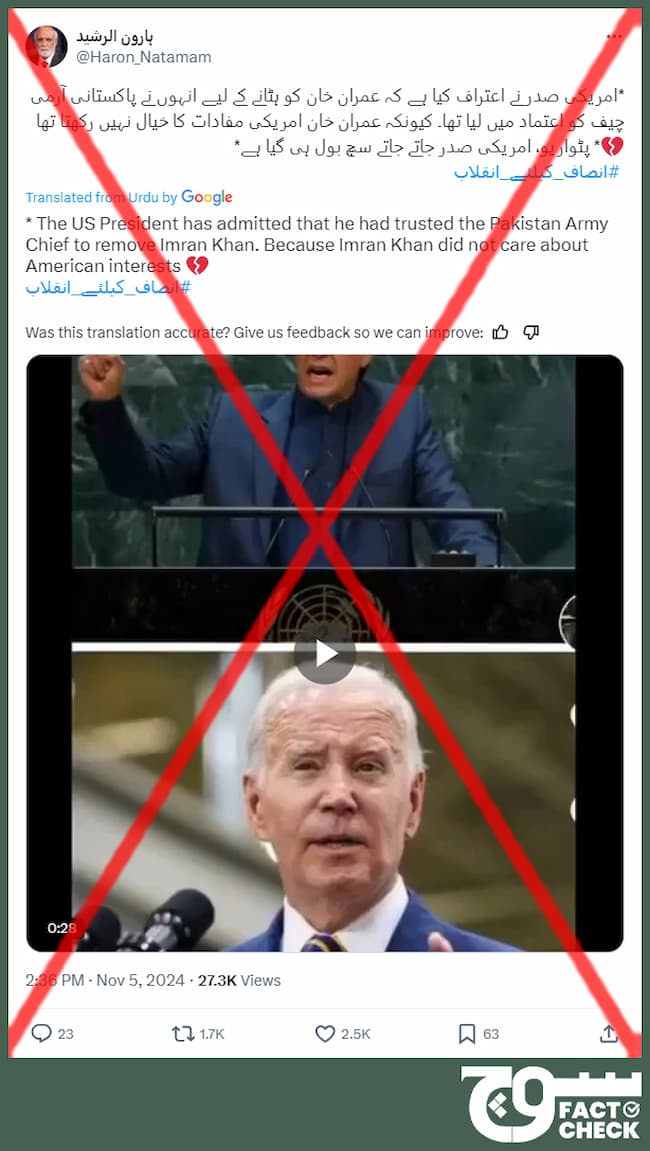

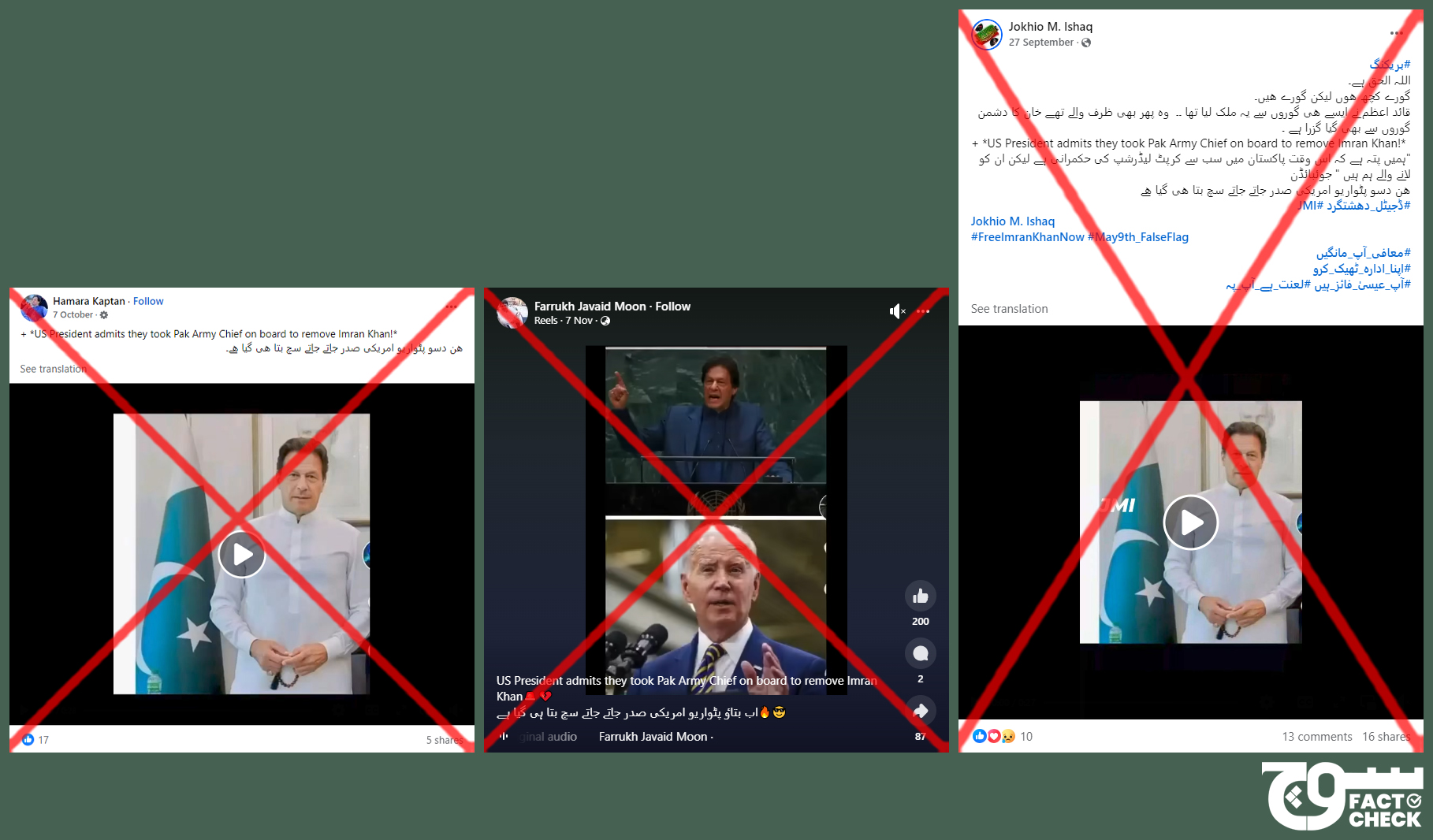

In September 2024, several posts emerged on Facebook containing the same video with an image of Pakistan’s former prime minister and founder of the Pakistan Tehreek-e-Insaf (PTI), Imran Khan, alongside a picture of outgoing US President Joe Biden.

The video features what appears to be Biden’s voice, claiming he conspired with Pakistan Army Chief Gen Syed Asim Munir to remove (archive) then-Prime Minister Khan through a no-confidence vote in 2022 — an allegation many, including PTI supporters, have dubbed the “regime change conspiracy” (archive).

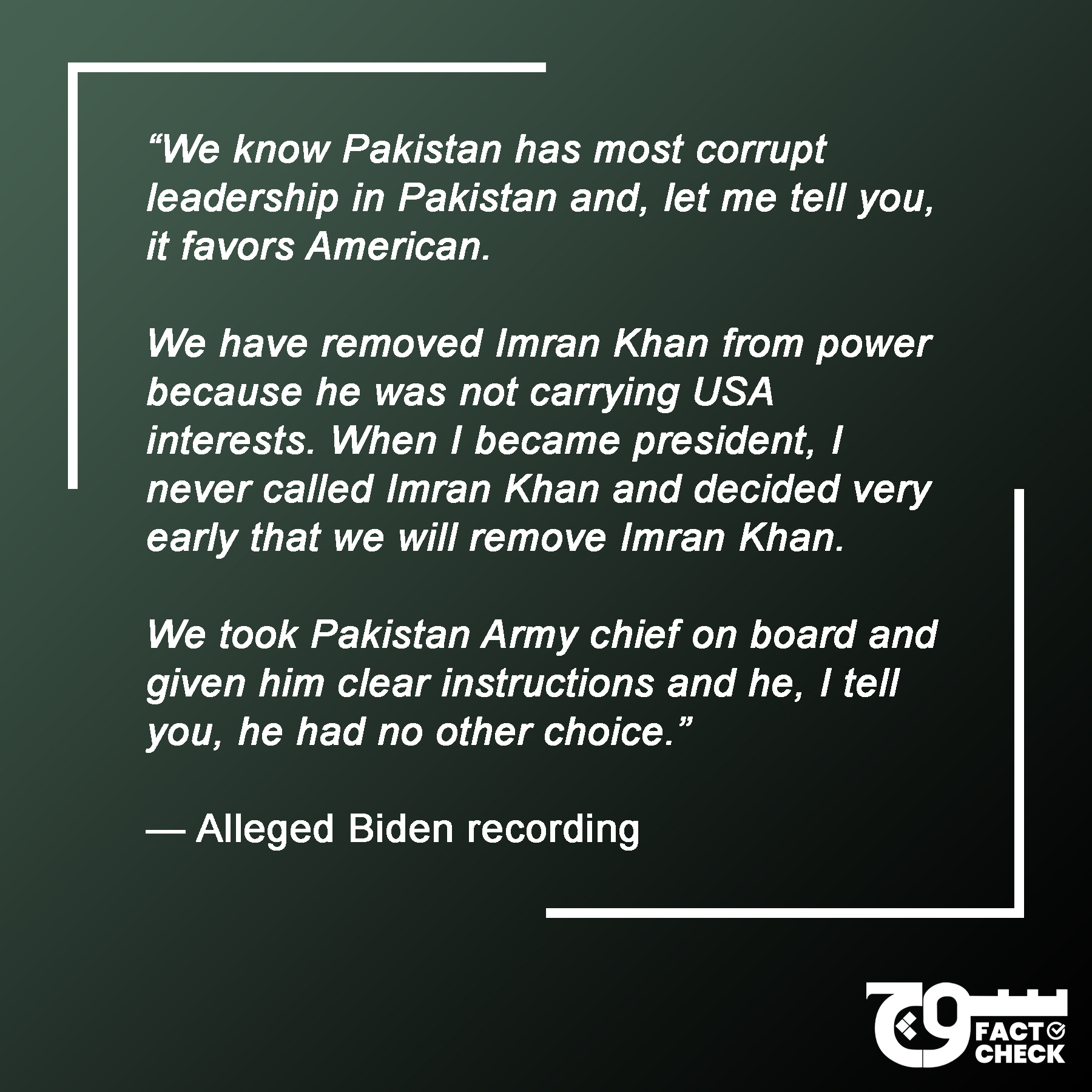

The alleged Biden recording states:

“We know Pakistan has most corrupt leadership in Pakistan and let me tell you it favours American. We have removed Imran Khan from power because he was not carrying USA interests. When I became president, I never called Imran Khan and decided very early that we will remove Imran Khan. We took Pakistan Army Chief on board and given him clear instructions and he, I tell you, he had no other choice.”

The Facebook post is accompanied by the following caption:

“+ *US President admits they took Pak Army Chief on board to remove Imran Khan!* ھن دسو پٹواریو امریکی صدر جاتے جاتے سچ بتا ھی گیا ھے۔

[What do you have to say now, patwariyon? The US president revealed the truth shortly before leaving.]”

“Patwariyon,” the plural of “Patwari,” is a term often used in a derogatory manner for supporters of the Pakistan Muslim League-Nawaz (PML-N), the political party currently in power. It is commonly employed by supporters of Khan, who is currently in jail over several cases brought against him after he was ousted.

The phrase “shortly before leaving” refers to the US presidential elections held on 5 November 2024. Biden announced (archive) on 21 July 2024 his decision “not to accept the nomination” and endorsed Vice President Kamala Harris as the Democratic candidate. She thanked (archive) him for the endorsement, saying she was “honored” by his decision.

Trump emerged (archive) the winner to become the 47th president of the US, bagging the 270 electoral votes required to enter the Oval Office. Biden’s term officially ends “at noon ET” (archive) on 20 January 2025.

Soch Fact Check is not investigating allegations of any foreign country’s involvement in Khan’s removal from the PM Office but only the authenticity of the audio in the claim.

‘Regime change conspiracy’

The “regime change” conspiracy stems from allegations levelled by Khan that the US, Pakistani military, and Prime Minister Shehbaz Sharif conspired to have him removed. All three have denied (archive) the claims.

Relations between Khan — imprisoned since August 2023 (archive) on multiple charges — and Pakistan’s military leadership have been strained (archive) since before his April 2022 ouster. He has levelled (archive) serious allegations against the military, accusing former Army Chief Gen (r) Qamar Javed Bajwa of engineering (archive) his removal and his successor, Gen Munir, of “assassination attempt and cover-ups” (archive).

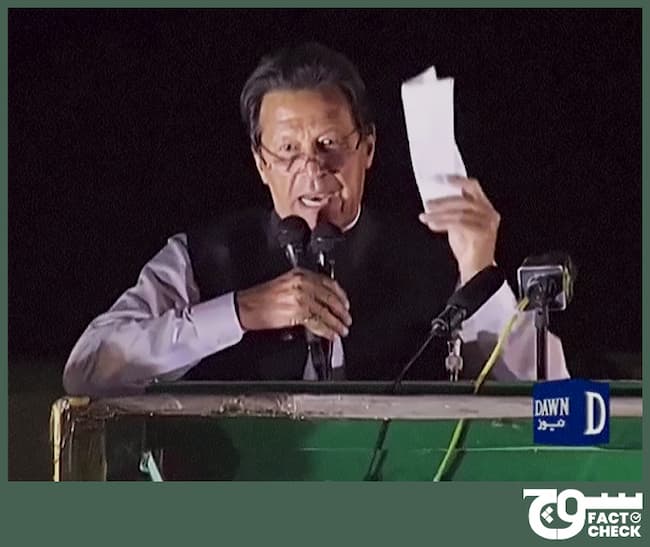

During one of his rallies in Islamabad on 27 March 2022, days before his ouster, Khan claimed he had evidence supporting his accusations. The proof was actually a diplomatic cable (archive) sent by Asad Majeed Khan, the then-Pakistani ambassador to the US, showing alleged interference from the State Department in his country’s affairs, he asserted. Later, this came to be known as the “Lettergate”, “Cipher-gate” or “Cable-gate” scandal.

The purported cable was sent after a conversation that took place on 7 March 2022 at the former ambassador’s farewell lunch. Officials from the State Department at the lunch reportedly included (archive) Assistant Secretary of State for its Bureau of South and Central Asian Affairs (SCA) Donald Lu and Deputy Assistant Secretary for Central Asian and Pakistan Affairs Lesslie C. Viguerie, whereas Deputy Chief of Mission Syed Naveed Bokhari and the defence attaché were in attendance from the Pakistani side.

At the time, Khan’s stance was that the US was angered by his 23 February 2022 visit (archive) to Russia on the eve of President Vladimir Putin’s invasion (archive) of Ukraine, as well as his refusal to condemn the war.

“Why would we condemn Russia? Are we your slaves that we would do whatever you say?” he said (archive) in his rallies, after Western officials urged his government to condemn Moscow’s move in Kyiv.

A part of these allegations (archive) stated that the US had expressed its desire for Khan to be removed from office so that its relations with Pakistan could smoothen out. If that did not happen, the claims added, the country would be forced into isolation.

Pakistan’s National Security Committee (NSC) had in March 2022 expressed “grave concern” (archive) over the alleged interference, with the Foreign Office saying démarches had been issued in this regard and Khan saying (archive) the official note was sent to “the American embassy”. The Foreign Ministry also summoned (archive) the then-acting US envoy to lodge a formal protest on 1 April 2022.

In a press conference, Maj Gen Babar Iftikhar, the director-general of the Pakistan Army’s media wing, the Inter-Services Public Relations (ISPR), said the military had conveyed its stance to the NSC and that the body’s statement did not mention the word “conspiracy”, according to a report (archive). A démarche, he added, was sent over the “interference” and “undiplomatic language” used by a State Department official.

In an August 2023 investigation (archive), American media outlet The Intercept claimed to have obtained the purported cipher through “an anonymous source in the Pakistani military”, mostly reiterating what had already been reported in the media and what the former prime minister had alleged throughout. The publication quoted State Department spokesperson Matthew Miller as saying the US “had expressed concern” about Khan’s Russia visit and “communicated that opposition both publicly and privately”.

Earlier, on 23 February 2022, the US had said that it was “aware” of Khan’s Moscow trip and “hoped” that “responsible” countries would express “concern” and “objection” to Russia’s war in Ukraine. In response to the conspiracy allegations, State Department spokespeople have issued denials multiple times in the past, alongside the usual bromides about democracy, rule of law, constitutional process, bilateral cooperation, and not having a position on any political candidate over another.

Elizabeth Horst — Principal Deputy Assistant Secretary and Deputy Assistant Secretary for Pakistan at the SCA — also termed (archive) the allegations against Lu as “categorically false”.

On 2 March, the US Senate Committee on Foreign Relations questioned (archive) Lu over Pakistan’s neutrality in the Ukraine conflict. This occurred just days before the aforementioned farewell lunch. The country abstained from voting in UN resolutions on the matter in both 2022 and 2023 (archived here and here, respectively).

On 27 March, Khan addressed the ‘Amar Bil Maroof’ rally in Islamabad and waved a letter (archive) in front of his supporters, saying it was proof of what he said was the “foreign-funded conspiracy” to topple him. A few days later, on 31 March, he identified (archive) the country in an apparent slip of the tongue, complaining that “America threatened me”.

In a bid to block the impending no-confidence motion against him, the then-premier dissolved (archive) the National Assembly on 3 April, sending lawmakers scrambling to the Supreme Court, which, on 7 April, ruled (archive) his move illegal and restored the parliament. A day later, Khan announced (archive) that while he accepted the decision, he would not accept an “imported government”. On 10 April, he was eventually voted (archive) out of office.

On 22 April, the NSC concluded (archive) that “there has been no foreign conspiracy” to remove Khan. Months later, purported audio leaks (archived here and here, respectively) revealed that the PTI founder had allegedly decided to “play with” the cipher for political mileage in collaboration with his top aides. Soch Fact Check has not independently verified these reports.

A few months after his ouster, Khan appeared to hint at rekindling his relationship with the US, stating in a November 2022 interview (archive) that the cipher conspiracy “is over, it’s behind me”, and admitted that his Russia visit was “embarrassing”. However, a year later, on 19 July 2023, his principal secretary Azam Khan termed (archive) the scandal a “premeditated conspiracy”.

On 20 March 2024, Lu, the top State Department official, also testified (archive) in front of the House Foreign Affairs Committee’s (HFAC) Subcommittee on Middle East, North Africa, and Central Asia (MENACA). In his remarks — which can be heard at the 39:56 mark in this video (archive) available on the HFAC’s YouTube channel — he said, “These allegations, this conspiracy theory, is a lie, it is a complete falsehood. […] At no point does it [the purported cipher] accuse the United States government or me personally of taking steps against Imran Khan.”

Screenshot from HFAC’s YouTube video

The ex-premier and his ally, former Foreign Minister Shah Mehmood Qureshi, were sentenced (archive) to 10 years in jail in the cipher case on 30 January 2024. The same day, Khan revealed (archive) that he had waved a “paraphrased copy” of the cable, not the real document, at his 2022 Islamabad rally and that he had never actually possessed the original. He also said that the conspiracy behind his removal “took place in October 2021”.

The conviction was struck down (archive) by an Islamabad court in June 2024 but challenged (archive) the same month by Pakistan’s Interior Ministry.

Recently, the Pakistani American Public Affairs Committee (PAKPAC) announced (archive) its endorsement of Donald Trump for the 2024 US elections. It also criticised Biden and Trump’s opponent, Vice President Harris, for allowing what it claimed was a “legislative coup” that resulted in Khan’s ouster.

There has been much discourse about alleged interference by the US in various countries, primarily in the Global South, over the past decades, according to academics, analysts, essays, research, opinion pieces, historical listicles, and books. In the case of Pakistan and Khan, the evidence is inconclusive either way.

No call from Biden

Interestingly, during his premiership, Khan and his aides appeared increasingly frustrated (archived here, here, and here, respectively) that Biden had not contacted the Pakistani prime minister even once since being sworn into office on 20 January 2021; this attracted “considerable attention” (archive) during the US President’s first year.

Khan’s reservations were echoed (archive) by US Senator Lindsey Graham in June 2021; however, the PTI founder later started to dial down the issue, saying Biden had “other priorities” (archive), was “busy” (archive), and that a phone call or lack thereof was the US President’s “business” (archive).

In a 27 September 2021 press briefing, former White House Press Secretary Jen Psaki responded (archive) to a question about whether Biden would call Khan soon, saying, “I don’t have anything to predict at this point in time.”

Reports have suggested that officials in the Khan administration tried multiple times (archived here and here, respectively) to get a call from the US president.

After his ouster, Khan once again complained (archive) about the lack of a call from Biden and instead praised the Trump administration.

Imran Khan’s Facebook page

“I had [a] perfectly good relationship with the Trump administration. It’s only when the Biden administration came, and it coincided with what was happening in Afghanistan. And for some reason, which I still don’t know, I never, they never got in touch with me,” he was quoted as saying in the May 2022 interview.

Imran Khan’s imprisonment

Khan was first detained (archive) on 9 May 2023 over charges of corruption (archive), with his detention triggering violent, country-wide protests. While he was released (archive) a few days later after the Supreme Court’s intervention, he was arrested (archive) and imprisoned for a second time on 5 August 2023, after a court ruled his “dishonesty [was] established beyond doubt” in the Toshakhana case.

The former PM has been incarcerated since then. In January 2024, just a month ahead of the general elections in Pakistan, he was sentenced to a total of 31 years over charges of corruption, leaking state secrets, and an un-Islamic marriage, according to these reports (archived here and here).

Khan was seen for the first time (archive) since his incarceration in an apparently leaked photo from the 16 May 2024 proceedings, when he was allowed to appear in the Supreme Court via a video link. A day prior, the PTI leader was granted (archive) bail in a corruption case. Earlier, the sentences handed down in two of the four cases he was convicted in were also suspended; one of them (archive) was the Toshakhana case. However, he would not be released from jail, his lawyer had said (archive).

In July, it was reported that Khan was acquitted in two other cases (archived here and here) by Pakistani courts.

Various groups have talked about the PTI founder’s continued detention. The Human Rights Commission of Pakistan (HRCP) expressed concerns (archive) over jail conditions and Amnesty International said (archive) it “found several fair trial violations under international human rights standards”, terming his imprisonment “arbitrary detention”.

The UN Working Group on Arbitrary Detention (WGAD) has said in an opinion (archive) that Khan’s detention is “arbitrary and in violation of international law”, according to a report (archive).

One of the cases against him, in which he was eventually acquitted, was about his marriage that was alleged to be “un-Islamic”; it was denounced by religious leaders and feminist groups (archived here, here, and here, respectively).

Fact or Fiction?

Soch Fact Check first searched for any reports on the matter published by credible media outlets but did not find one. In particular, we could not find any instance where Biden mentioned Imran Khan by name, whether in interviews, public speeches or statements.

The audio in question appeared suspicious with its semantic and grammatical errors and its tone, which is unlike the diplomatic language used by leaders of states. We also suspected that it was likely engineered through artificial intelligence (AI) or created by splicing.

Incidentally, in 2022, social media users had falsely claimed that Biden celebrated Khan’s removal from office. Soch Fact Check had investigated it then and found that the US President was actually celebrating jurist Ketanji Brown Jackson’s confirmation to the US Supreme Court, making her the first Black woman to serve as a justice in over 200 years.

When asked for a comment, PolitiFact Editor-in-Chief Katie Sanders said, “It does not sound authentic at all; the audio is weirdly sped up in places.”

Audio engineer’s analysis

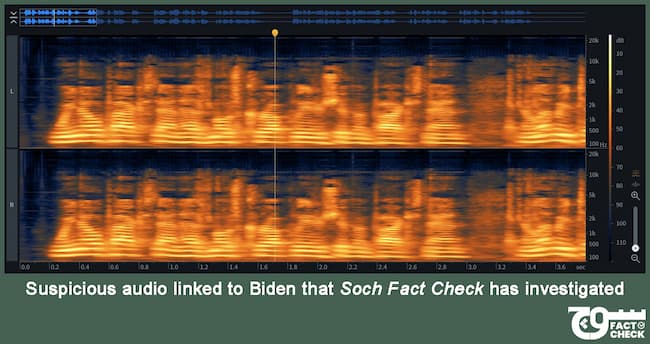

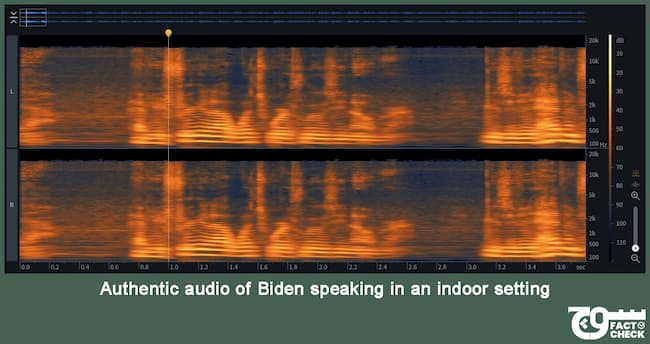

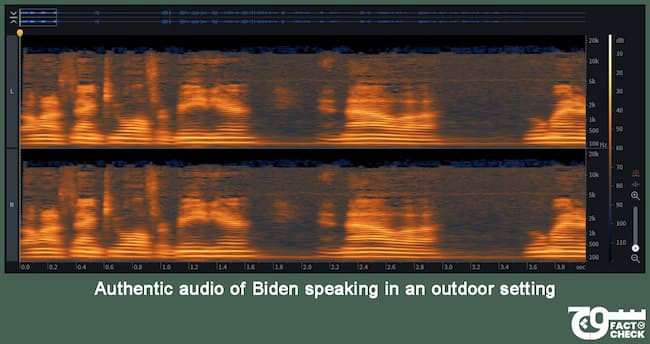

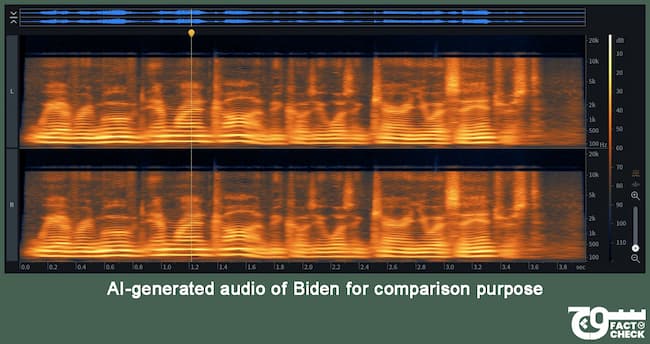

Soch Fact Check spoke to Shaur Azher, a lecturer at Karachi University (KU) and the Shaheed Zulfikar Ali Bhutto Institute of Science and Technology (SZABIST) and an audio engineer at Soch Videos, our sister company, who specialises in sound design as well as audio mixing and mastering. After analysing the clip and comparing it with authentic videos of Biden speaking publicly indoors and outdoors, he concluded that it was most likely created using AI.

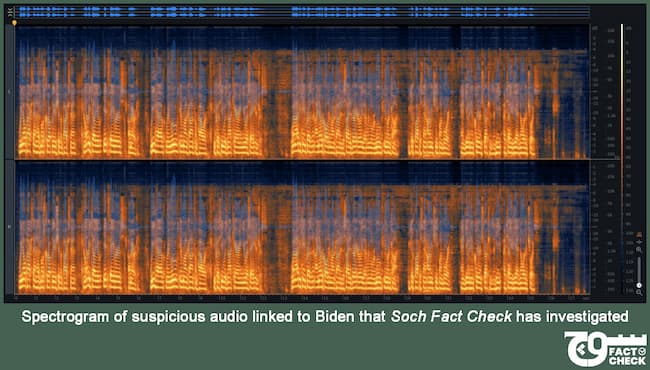

Azher himself generated an audio clip of Biden using AI for a better comparison and performed spectrogram and voice biometrics analyses. The former involves breaking down an audio signal into its frequency components to create a visualisation, which then allows for the detection of anomalies that indicate tampering or synthetic generation. The latter, on the other hand, compares a suspicious clip to authentic samples of the original speaker and evaluates factors such as pitch, rhythm, and speech patterns to identify possible discrepancies that suggest manipulation.

According to his analysis, the lower frequencies — between 100 and 2000 Hz — are consistent and do not vary in the clip we are investigating; this should not occur in an authentic recording. In addition, at least five instances of crossfades indicate that multiple audio snippets were combined, with music added later on.

Moreover, unlike genuine recordings of Biden, which feature the natural breathing spaces of human speech as visible on a spectrogram, the audio in question and the AI-generated voice exhibit merged frequencies and lack such pauses.
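To make the idea of a spectrogram comparison more concrete, the following is a minimal sketch, not the method Azher used. It assumes two synthetic test signals (a steady tone and a tone whose loudness rises and falls), and the function names `stft_magnitudes` and `band_energy_variation` are hypothetical. It splits each signal into short overlapping frames, computes the frequency content of each frame, and then measures how much the energy in the 100 to 2000 Hz band changes from frame to frame.

```python
import cmath
import math
import statistics


def stft_magnitudes(samples, frame_size=256, hop=128):
    """Return one magnitude spectrum (list of floats) per overlapping frame."""
    # A Hann window tapers each frame to reduce leakage at its edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        frame = [samples[start + n] * window[n] for n in range(frame_size)]
        spectrum = []
        # Naive DFT over the non-negative frequency bins: slow but dependency-free.
        for k in range(frame_size // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            spectrum.append(abs(acc))
        frames.append(spectrum)
    return frames


def band_energy_variation(frames, sample_rate, frame_size, low_hz, high_hz):
    """Coefficient of variation of per-frame energy inside [low_hz, high_hz]."""
    bin_hz = sample_rate / frame_size
    lo, hi = int(low_hz / bin_hz), int(high_hz / bin_hz)
    energies = [sum(m * m for m in spectrum[lo:hi + 1]) for spectrum in frames]
    mean = statistics.fmean(energies)
    return statistics.pstdev(energies) / mean if mean else 0.0


# Assumed toy inputs: these are synthetic signals, not the clip from the article.
SAMPLE_RATE = 8000
N = 2048
steady = [math.sin(2 * math.pi * 440 * t / SAMPLE_RATE) for t in range(N)]
# Same tone with a slow loudness envelope, loosely imitating speech with pauses.
pulsed = [s * (0.5 + 0.5 * math.sin(2 * math.pi * 4 * t / SAMPLE_RATE))
          for t, s in enumerate(steady)]

steady_cv = band_energy_variation(stft_magnitudes(steady), SAMPLE_RATE, 256, 100, 2000)
pulsed_cv = band_energy_variation(stft_magnitudes(pulsed), SAMPLE_RATE, 256, 100, 2000)
print(f"steady tone variation: {steady_cv:.4f}")
print(f"pulsed tone variation: {pulsed_cv:.4f}")
```

In this toy setup, the steady tone shows almost no frame-to-frame variation in the band, while the pulsed tone varies noticeably. A real forensic comparison would involve far more than a single number, including visual inspection of the spectrogram and reference recordings of the speaker.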

Analysis by AI, linguistic experts

Soch Fact Check reached out to the Deepfakes Rapid Response Force, an initiative by the human rights nonprofit WITNESS, to help analyse the audio in question.

“One team analysed the audio using a speaker verification tool and compared it with eight verified samples of Biden. They concluded that it was not generated using AI. However, due to poor grammar and an unnatural cadence, it is possible that the video was spliced and edited. Unfortunately, the presence of music in the background makes it impossible to determine this with certainty,” the Force said in an emailed response.

Any fake audio or video designed using generative AI for the purpose of imitating real people is defined as a deepfake. The technology works by using machine learning (ML), which teaches an algorithm to recognise and replicate the distinct patterns and features within a dataset — for example, the video or audio of a real individual — enabling it to generate original sound or visuals. This is usually done without the consent of the person whose voice is being mimicked.

We also sent the audio for analysis to Professor Dr Hany Farid, a faculty member of the UC Berkeley’s School of Information and Department of Electrical Engineering and Computer Sciences whose research focuses on digital forensics and misinformation, among other topics.

In an emailed response, Prof Farid — who is also a member of the Berkeley Artificial Intelligence Research (BAIR) Lab — said, “I’ve analyzed this audio with a model we trained to distinguish real from AI-generated voices. This model classifies this record as AI-generated. In addition, this audio has the tell-tale signs of being AI-generated in terms of cadence and intonation.”

Soch Fact Check also spoke with Dr Catalin Grigoras, an associate professor and director of the National Center for Media Forensics (NCMF), part of the Music & Entertainment Industry Studies Department at the University of Colorado Denver’s College of Arts & Media.

In an emailed response, he said the forensic analysis showed that the audio in question “contains traces of AI” and “is consistent with an AI-generated voice”.

We also contacted Dr Paul Foulkes, a professor of linguistics and phonetics at the University of York’s Department of Language and Linguistic Science, for his analysis of Biden’s alleged speech in the audio in question compared to his authentic speeches and statements.

An expert who works on phonology, language variation and change, and forensic phonetics, Dr Foulkes said in an emailed response, “Even the linguistic and phonetic information reveals that this is clearly not an authentic recording.” The four examples he analysed from the transcription are mentioned below:

- “We know Pakistan has ø most corrupt leadership in Pakistan”

- “I never called Imran Khan and decided very early that we’ll remove Imran Khan [*him]”

- “We took ø Pakistan army chief on board…”

- “…and given [*gave] him clear instructions”

“In examples 1 and 3, the article is missing, indicated by ø. In standard US English, it would be mandatory to include articles in this position. In example 1, we would expect the, and in example [3], we would expect a or the,” depending on whether it’s referring to a single army chief or more than one, Dr Foulkes wrote.

“The examples are semantically and/or syntactically incoherent and extremely unlikely to be produced by a speaker of standard English, especially one who is very careful with his words,” he explained.

The professor added, “In example 1 — Pakistan has X in Pakistan — the repetition of Pakistan makes no sense (cf. …in Asia or …the world). Example 2 repeats Imran Khan. While this is a plausible sentence on the face of it, a more likely version would have him in the final position. The artificiality of the example is revealed by the inappropriate intonation used; a natural production of the words used would be most likely to place the stress on the second syllable of remove. Here, the stress falls on Imran. The timing is also unusual, with no pause or intonation juncture between the two clauses of the sentence (I never called Imran Khan || and decided very early…).”

In the third and fourth examples, “the form of the verb to give is ungrammatical in context; gave is expected here for a standard English speaker”, Dr Foulkes noted, adding, “In standard English, the clauses would need to be connected either by ‘took…gave’ or ‘have/had taken… [have/had] given’.”

“To find any one of these grammatical issues in a statement by a standard English speaker would be possible, perhaps, as a slip of the tongue. To find all of them within 30 seconds, especially in the speech of such a carefully controlled speaker, very strongly suggests that this recording is not authentic,” he concluded.

Results from AI-detection tools

Human listeners are unlikely to reliably detect the subtle signs of manipulation and inconsistencies in audio. This is supported by a University College London (UCL) study (archive), published on 2 August 2023 in the peer-reviewed journal PLOS One, which involved running experiments in English and Mandarin and “found that detection capability is unreliable”. The research noted that “humans were only able to detect artificially generated speech 73% of the time”.

Deepfake detection tools, on the other hand, do not rely on listening the way humans do; instead, they examine samples for anomalies, such as absent frequencies, that artificially generated sounds may produce. These systems also concentrate on specific features of speech, such as the speaker’s breathing patterns and the variations in their voice pitch.

Therefore, to further analyse the audio in question, we employed two AI and manipulation detection tools and used the following three samples as inputs: the direct link to the Facebook post in question, its audio file separated from the video, and a cleaner version of it.

The first is TrueMedia.org, a free product “to quickly and effectively detect deepfakes” and that “detects if there are traces that audio has been manipulated or cloned”, according to its website. The nonprofit that created the tool was founded by Dr Oren Etzioni, Professor Emeritus at the University of Washington and the founding CEO at the Allen Institute for AI (AI2).

Testing all three samples on TrueMedia.org revealed “substantial evidence of manipulation”, particularly in the semantic and voice categories. It detected AI-generated audio in the first sample with 100% confidence. The results are publicly available and can be viewed here, here, and here.

As we progressed from the first to the third audio samples, we found that the Audio Authenticity Detector — which looks for evidence of whether a sample was created by an AI generator or cloning — progressed from 65% confidence to 67% and eventually to 79%.

The Voice Anti-Spoofing Analysis — which only focuses on evidence of whether an audio sample was created by an AI generator, not cloning — yielded 49% confidence for both the Facebook post and the audio file, but shot up to 100% when inspecting the cleaner version.

The Voice Biometric and Voiceprinting Analysis — which also examines if audio was created by an AI generator or cloning and only works for audio, not video — turned up 92% and 95% confidence for the audio file and its cleaner version, respectively.

Lastly, the Audio Transcript Analysis — which uses semantic understanding of speech for detection — also revealed substantial evidence of manipulation.

TrueMedia.org also provided us with a textual analysis that it compiled using AI for each of the three inputs mentioned above. The most accurate analysis for the third file reads, “The transcript presents a highly controversial and unlikely scenario where a US president openly admits to orchestrating the removal of a foreign leader, Imran Khan, with the cooperation of the Pakistan Army Chief. Such a statement would be diplomatically explosive and is unlikely to be made publicly by any sitting president. The language used is informal and lacks the diplomatic tone typically expected in official statements. Additionally, the content seems exaggerated and implausible, suggesting it is fabricated or taken out of context.”

The second tool we tested is DeepFake-O-Meter, developed by the University at Buffalo’s Media Forensics Lab (UB MDFL). Of the available detectors, we used three, namely LFCC-LCNN, RawNet3, and AASIST. They revealed that the probability of the audio in question being fake was 93.42%, 93.04%, and 99.5%, respectively. The results are available here, here, and here; however, only those who have signed up can view them.
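As a small illustration of how the three detector scores above can be read together, here is a hedged sketch. The 50% cut-off and the summary fields are assumptions made for this example only; they are not part of DeepFake-O-Meter or of our methodology, and the mean is a convenience figure, not a result reported by the tool.

```python
from statistics import fmean

# Fake-probability scores reported above, in percent.
scores = {"LFCC-LCNN": 93.42, "RawNet3": 93.04, "AASIST": 99.5}

# Assumption: an illustrative cut-off, not one used by the tool itself.
THRESHOLD = 50.0

# Detectors whose score says "more likely fake than real" under that cut-off.
flagged = [name for name, p in scores.items() if p > THRESHOLD]

summary = {
    "lowest": min(scores.values()),          # the most cautious detector
    "mean": round(fmean(scores.values()), 2),
    "flagged": len(flagged),                 # how many detectors agree
}
print(summary)
```

Looking at the lowest score and the number of agreeing detectors, not only the average, guards against one very confident detector masking disagreement among the others.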

We subsequently reached out to UB MDFL Director Dr Siwei Lyu, the principal investigator (PI) of the DeepFake-O-Meter and a professor at the university’s Department of Computer Science and Engineering.

Dr Lyu explained that once a user uploads their sample and selects a detector, “containerized detection algorithms process the sample on the backend” before presenting the results. LFCC, or Linear Frequency Cepstral Coefficients, is a feature that performs voice analysis, while LCNN, which stands for Lightweight Convolutional Neural Network, is a machine learning model that acts as a classifier. When combined, they conduct audio deepfake detection, he said.

On the other hand, RawNet3, the professor said, “is a different classification model based on MFCC [Mel Frequency Cepstrum Coefficient] features”, whereas AASIST, or Audio Anti-Spoofing using Integrated Spectro-Temporal Graph Attention Networks, “is a recent audio deepfake detection method”. The choice of the three detectors used together by Soch Fact Check “effectively cover a range of audio manipulation types, making them a strong choice”, he noted.
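For readers curious about what an LFCC feature looks like in practice, the sketch below follows the commonly described recipe: pass a frame's power spectrum through triangular filters spaced evenly in frequency, take the logarithm of each filter's energy, and apply a discrete cosine transform. It is an assumption-laden illustration, not the DeepFake-O-Meter's code; the function names, filter counts, and the flat test spectrum are all hypothetical.

```python
import math


def linear_filterbank(num_filters, num_bins):
    """Triangular filters whose centres are evenly spaced across the bins."""
    # num_filters + 2 edge points, spaced linearly
    # (MFCC would space them on the mel scale instead).
    edges = [i * (num_bins - 1) / (num_filters + 1) for i in range(num_filters + 2)]
    bank = []
    for f in range(num_filters):
        left, centre, right = edges[f], edges[f + 1], edges[f + 2]
        weights = []
        for b in range(num_bins):
            if left < b <= centre:
                weights.append((b - left) / (centre - left))    # rising slope
            elif centre < b < right:
                weights.append((right - b) / (right - centre))  # falling slope
            else:
                weights.append(0.0)
        bank.append(weights)
    return bank


def lfcc(power_spectrum, num_filters=20, num_coeffs=12):
    """Linear Frequency Cepstral Coefficients for one frame's power spectrum."""
    bank = linear_filterbank(num_filters, len(power_spectrum))
    # Log of each filter's energy; the small constant avoids log(0).
    log_energies = [
        math.log(sum(w * p for w, p in zip(weights, power_spectrum)) + 1e-10)
        for weights in bank
    ]
    # DCT-II turns the log energies into cepstral coefficients.
    return [
        sum(e * math.cos(math.pi * k * (m + 0.5) / num_filters)
            for m, e in enumerate(log_energies))
        for k in range(num_coeffs)
    ]


# Assumed toy input: a perfectly flat spectrum with 129 frequency bins.
flat_coeffs = lfcc([1.0] * 129, num_filters=15, num_coeffs=5)
print(flat_coeffs)
```

With a flat spectrum, every filter collects the same energy, so only the first coefficient is meaningfully non-zero; real speech produces a richer pattern across coefficients. In a detector such as LFCC-LCNN, vectors like these would be computed for every frame and passed to the neural network classifier.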

When Dr Lyu analysed the audio samples we sent to him, both the original and its clean version, he said, “The one without music has a lot of cracking and buzzing noises in the background. These could be the artifacts due to the AI generation model, as similar artifacts have been observed in previous audio deepfakes. So, combining the algorithmic and manual analysis, I will say this sample is likely to be created from AI models.”

Artefacts, or sonic artefacts, may be defined as undesired, unwanted sounds or audible distortions produced accidentally from the recording equipment or process but can also be a result of editing or manipulation. However, many of these artefacts are “more challenging to describe, as many features are not interpretable”, which is why Dr Lyu said he does not solely rely on algorithmic analysis.

Dr Lyu and Manjeet Rege, the director of the University of St. Thomas’ Center for Applied Artificial Intelligence, have previously told Poynter (archive) that people need to “watch for signs of AI-generated audio, including irregular or absent breathing noises, intentional pauses and intonations, along with inconsistent room acoustics”.

Meanwhile, Soch Fact Check reached out to the White House and the US Department of State in this regard. The latter referred us to the former for a comment. This article will be updated if and when we receive a response.

Online deepfake tools, Biden robocalls

It is important to note that a simple Google search for “biden voice generator” led Soch Fact Check to quick tools, such as Parrot AI, FineVoice, Jammable, and TopMediai, among others, that allow anyone to create audio using Joe Biden’s voice.

This indicates how easy it is to fake any public figure’s voice, either by converting one’s own voice to that of the selected person or by the text-to-speech (TTS) method, where phrases used in the input are converted to the voice of the individual of choice.

It is also noteworthy that in January 2024, robocalls (archive) imitating Biden’s voice targeted (archive) “between 5,000 and 25,000 voters” ahead of the New Hampshire primary, a process (archive) through which presidential nominees are chosen in the US.

Interestingly, the robocalls in question used “What a bunch of malarkey”, a phrase that is “characteristic of the 81-year-old president” (archive). The fine proposed in the case is the US Federal Communications Commission’s (FCC) first one (archive) “involving generative AI technology”.

Political consultant Steven Kramer (archive), who was identified as the man behind the robocalls, had reportedly hired street magician Paul Carpenter (archive) to create Biden’s AI voice. The former — who faces over two dozen criminal charges — could also be fined up to USD 6 million, whereas the company that transmitted the robocalls, Lingo Telecom, has agreed (archive) to pay a USD 1 million fine.

AI in Pakistan’s legislature

We could not confirm who created the audio, or if they are of Pakistani origin; the country currently has no laws regarding the use of AI.

In September 2024, the Regulation of Artificial Intelligence Bill, 2024 (archive), submitted by Pakistan Muslim League-Nawaz (PML-N) lawmaker Afnan Ullah Khan, was introduced in the Senate. The draft legislation proposes the establishment of a National Artificial Intelligence Commission and fines of PKR 1.5-2.5 billion for violations.

According to a summary (archive) on the Senate’s website, the bill has been referred to the Standing Committee on Information Technology and Telecommunication.

Prior to that, the Ministry of Information Technology and Telecommunication uploaded (archive) the draft of a National Artificial Intelligence Policy (archive) in 2023 for consultation with relevant stakeholders and set up (archive) a committee for this purpose. The Digital Rights Foundation (DRF) submitted feedback the same year.

Conclusion

Soch Fact Check conducted rigorous deepfake tests, spoke with experts in digital forensics, AI, misinformation, audio engineering, and linguistics and phonetics, and interviewed a sound engineer to ascertain that the audio of Biden’s alleged confession is most likely AI-generated or spliced. A lack of any credible reporting in this regard raises further questions about its authenticity. That its release came just weeks before the 2024 US elections also supports our conclusion that it is fake.

Images in cover photo: joebiden and ImranKhanOfficial

To appeal against our fact-check, please send an email to appeals@sochfactcheck.com