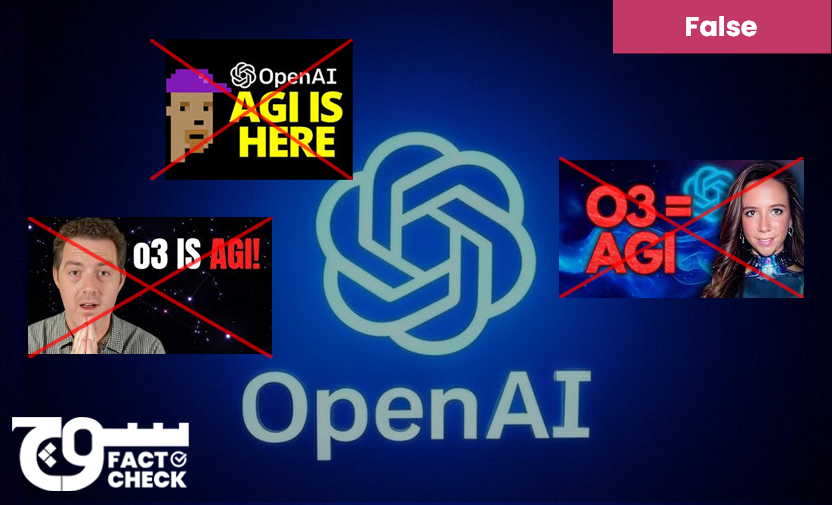

Claim: OpenAI’s new model o3 will change everything because artificial general intelligence (AGI) is finally here.

Fact: OpenAI’s new reasoning model o3 does not amount to AGI.

On 5 December 2024, OpenAI launched a 12-day event called “12 Days of OpenAI”. On each day of the event, the company introduced a new feature or update to its AI-powered products.

On the last day, the company introduced its most recent reasoning model, o3, and a lighter version, o3-mini. Within a few days of the news, several social media influencers and AI enthusiasts began claiming that AGI had been reached.

Fact or Fiction?

OpenAI’s new o3 model has not achieved AGI. To understand why, we must explain what o3 actually is and what it would mean for an AI system to achieve AGI.

What is the o3 reasoning model?

OpenAI has recently introduced its latest AI model, o3, designed to extend reasoning capabilities beyond those of its predecessor, o1. The o3 model excels in complex tasks, including advanced coding, mathematics, and science, by employing a step-by-step logical approach known as “private chain of thought” reasoning. This method allows the model to plan and deliberate internally before generating a response, leading to more accurate and reliable outputs.

On the ARC-AGI benchmark, which assesses an AI’s ability to solve novel abstract reasoning tasks it has not encountered before, o3 achieved three times the accuracy of o1. Early evaluations also show o3 reaching a rating of 2727 on Codeforces programming contests and scoring 96.7 percent on AIME 2024 mathematics problems.
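To put the AIME figure in concrete terms, the short sketch below converts the reported percentage into a count of correct answers. It assumes the AIME 2024 evaluation covers 30 questions in total (two 15-question papers), which the figures above do not state; the function names are purely illustrative.

```python
# Assumption: the AIME 2024 evaluation covers 30 questions (two papers of 15).
TOTAL_QUESTIONS = 30


def percent_correct(correct: int, total: int = TOTAL_QUESTIONS) -> float:
    """Return the share of correct answers as a percentage, rounded to one decimal."""
    return round(100 * correct / total, 1)


def correct_answers_for(percent: float, total: int = TOTAL_QUESTIONS) -> int:
    """Return the whole number of correct answers closest to a reported percentage."""
    return round(percent * total / 100)


reported = 96.7  # percentage reported for o3 on AIME 2024
solved = correct_answers_for(reported)
print(f"{reported}% of {TOTAL_QUESTIONS} questions is about {solved} correct")
print(f"{solved}/{TOTAL_QUESTIONS} rounds to {percent_correct(solved)}%")
```

Under that assumption, the reported score corresponds to roughly one missed question, which shows how strong the result is on this specific test without saying anything about performance outside it.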

OpenAI has also introduced o3-mini, a lighter and faster version of the o3 model, catering to applications that require fewer computational resources.

At this time, both o3 and o3-mini are undergoing testing and are not publicly available. OpenAI has invited safety and security researchers to apply for early access until January 10, 2025, with plans to release o3-mini to the public in January 2025.

These advancements underscore OpenAI’s commitment to developing AI systems with improved reasoning abilities and safety measures, contributing to the broader goal of achieving artificial general intelligence (AGI).

However, experts caution that while o3 represents significant progress, AGI remains a distant objective, and ongoing research is essential to address the current limitations of AI reasoning models.

What is Artificial General Intelligence (AGI)?

Artificial General Intelligence (AGI) refers to a class of artificial intelligence systems capable of performing any intellectual task that a human being can do. Unlike specialised AI, which is designed for narrow applications such as image recognition or language translation, AGI can generalise knowledge across domains, adapt to novel situations, and exhibit cognitive flexibility akin to human reasoning.

The goal of AGI research is to create systems that not only solve predefined problems but also understand, learn, and innovate autonomously across diverse contexts. The development of AGI poses profound ethical and societal challenges. These include concerns about job displacement, decision-making accountability, and the potential for AGI systems to surpass human intelligence in unpredictable ways.

Researchers like Stuart Russell emphasise the need for alignment between AGI systems and human values to mitigate risks.

Key characteristics of AGI:

- Cognitive Flexibility: AGI systems can transfer knowledge and skills from one domain to another without significant retraining.

- Autonomous Learning: They possess the ability to learn from experience, similar to how humans continuously acquire and refine knowledge.

- Contextual Understanding: AGI can understand complex, ambiguous, and nuanced scenarios, making it versatile in problem-solving.

- Self-awareness: While not a strict requirement, some theorists propose that AGI could achieve self-awareness or possess intrinsic goals, enhancing its decision-making processes.

AGI represents the pinnacle of AI research, aiming to build machines with general-purpose intelligence comparable to or surpassing human capabilities.

As the field progresses, a multidisciplinary approach involving computer science, neuroscience, ethics, and philosophy will be crucial to navigating the technical challenges and societal implications of this transformative technology.

What are the experts saying?

François Chollet, the creator of the ARC-AGI benchmark, has weighed in on this development. He cautions against equating the o3 model’s performance with the attainment of AGI, stating, “Passing ARC-AGI does not equate to achieving AGI, and, as a matter of fact, I don’t think o3 is AGI yet.” Chollet points out that o3 still fails on some straightforward tasks, indicating fundamental differences from human intelligence.

Other AI experts such as Don Lim share similar reservations. While acknowledging o3’s advancements, he emphasises that excelling in specific benchmarks does not necessarily mean an AI system possesses general intelligence.

In his Medium post, Lim explicitly writes, “OpenAI-o3 is NO AGI”. He notes that OpenAI itself did not claim that o3 was AGI, and he sets out seven reasons why he does not think o3 can be classified as AGI.

The consensus is that, although o3 represents a significant milestone, it remains a specialised tool rather than a truly general intelligence.

Virality

On Facebook, we found the claim shared in more than a dozen posts.

We also found it shared in two posts on Instagram.

OpenAI is a pioneer in the field of AI, especially when it comes to large language models, and any developments announced by the company are analysed and assessed by thousands of AI enthusiasts around the world.

Conclusion: OpenAI has not reached AGI, although experts believe that the new reasoning model o3 is closer to AGI than earlier GPT models.

—

Background image in cover photo: Medium

To appeal against our fact-check, please send an email to appeals@sochfactcheck.com